Workshop Logistics

Accessing an AWS environment

For the FortiGate Autoscale Tec Recipe, you will need the following:

- AWS sign in link

- IAM User with console access

- Password for the IAM User

- Two properly sized ITF’d BYOL licenses for FortiGate VM (unlimited CPUs recommended to avoid sizing confusion)

Navigating the AWS Console

When you first log in, you will see the Console Home page.

Use the Search Box at the top to search for services such as EC2, VPC, CloudShell, etc.

When the results pop up, right-click the name of the service and open the desired console in a new tab. This makes navigation easier.

AWS Networking Concepts

Before diving into the reference architecture for this workshop, let’s review core AWS networking concepts.

AWS Virtual Private Cloud (VPC) is a logically isolated section of the AWS Cloud where you can launch AWS resources in a virtual network that you define. You have complete control over your virtual networking environment, including selection of your own IP address range, creation of subnets, and configuration of route tables and network gateways.

Availability zones (AZ) are multiple, isolated locations within each Region that have independent power, cooling, physical security, etc. A VPC spans all of the AZs in the Region.

A Region is a collection of multiple AZs in a geographic location. The AZs in the same Region are all interconnected via redundant, ultra-low-latency networks.

All subnets within a VPC are able to reach each other with the default or intrinsic router within the VPC. All resources in a subnet use the intrinsic router (1st host IP in each subnet) as the default gateway. Each subnet must be associated with a VPC route table, which specifies the allowed routes for outbound traffic leaving the subnet.
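The two ideas above (the intrinsic router sitting at the first host IP, and a route table choosing where outbound traffic goes) can be sketched in a few lines of Python. This is only an illustration using the standard `ipaddress` module: the CIDRs, the `igw-example` target name, and both helper functions are hypothetical, and the lookup assumes the most specific (longest-prefix) matching route wins.

```python
import ipaddress


def vpc_router_ip(subnet_cidr: str):
    """Return the first host address of a subnet (network address + 1)."""
    subnet = ipaddress.ip_network(subnet_cidr)
    return subnet.network_address + 1


def lookup_route(route_table: dict, destination_ip: str) -> str:
    """Return the target of the most specific route that matches the destination."""
    dest = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {destination_ip}")
    # Longest prefix (largest prefixlen) is the most specific match.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# Hypothetical route table for a public subnet in a 10.0.0.0/16 VPC.
example_route_table = {
    "10.0.0.0/16": "local",       # traffic that stays inside the VPC
    "0.0.0.0/0": "igw-example",   # everything else leaves via an IGW
}

router = vpc_router_ip("10.0.1.0/24")
inside_target = lookup_route(example_route_table, "10.0.2.15")
outside_target = lookup_route(example_route_table, "198.51.100.7")
```

Here an instance in 10.0.1.0/24 would use `router` as its default gateway, while the route table decides whether a packet stays local or is handed to the gateway target.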

An Internet Gateway (IGW) is a horizontally scaled, redundant, and highly available VPC component that allows communication between instances in your VPC and the internet. It therefore imposes no availability risks or bandwidth constraints on your network traffic.

AWS NAT Gateway (NAT GW) is a Network Address Translation (NAT) service. You can use a NAT gateway so that instances in a private subnet can connect to services outside your VPC but external services cannot initiate a connection with those instances.

AWS Transit Gateway (TGW) is a highly scalable cloud router that connects your VPCs in the same region to each other, to on-premises networks, and even to the internet through one hub. By using multiple route tables on a single TGW, you can design hub and spoke routing for traffic inspection and enforcement of security policy across multiple VPCs.

AWS Gateway Load Balancer (GWLB) is a transparent network gateway that distributes traffic (in a 3-tuple or 5-tuple flow-aware manner) to a fleet of virtual appliances for inspection. This is a regional load balancer that uses GWLB endpoints (GWLBe) to securely intercept data plane traffic within consumer VPCs in the same region.
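To make "flow aware" concrete, the sketch below hashes a flow's tuple and uses the result to choose an appliance, so every packet of the same flow lands on the same target. This is not the algorithm GWLB actually uses (which is not described here); the function name, the SHA-256 choice, and the appliance names are all assumptions made for illustration.

```python
import hashlib


def pick_appliance(flow: tuple, appliances: list) -> str:
    """Map a flow tuple to one appliance, deterministically (illustrative only)."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(appliances)
    return appliances[index]


# Hypothetical fleet of inspection appliances.
fleet = ["fortigate-a", "fortigate-b", "fortigate-c"]

# 5-tuple: source IP, source port, destination IP, destination port, protocol.
flow_5 = ("10.0.1.10", 49152, "203.0.113.5", 443, "tcp")
# 3-tuple: source IP, destination IP, protocol (ports are ignored).
flow_3 = ("10.0.1.10", "203.0.113.5", "tcp")

first_packet = pick_appliance(flow_5, fleet)
later_packet = pick_appliance(flow_5, fleet)
three_tuple_choice = pick_appliance(flow_3, fleet)
```

With the 3-tuple key, every connection between the same pair of hosts over the same protocol goes to one appliance, whereas the 5-tuple key can spread separate connections between those hosts across the fleet.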

In this workshop we will use all these components to test FortiGate Autoscale in an enterprise design.

AWS Common Architecture Patterns

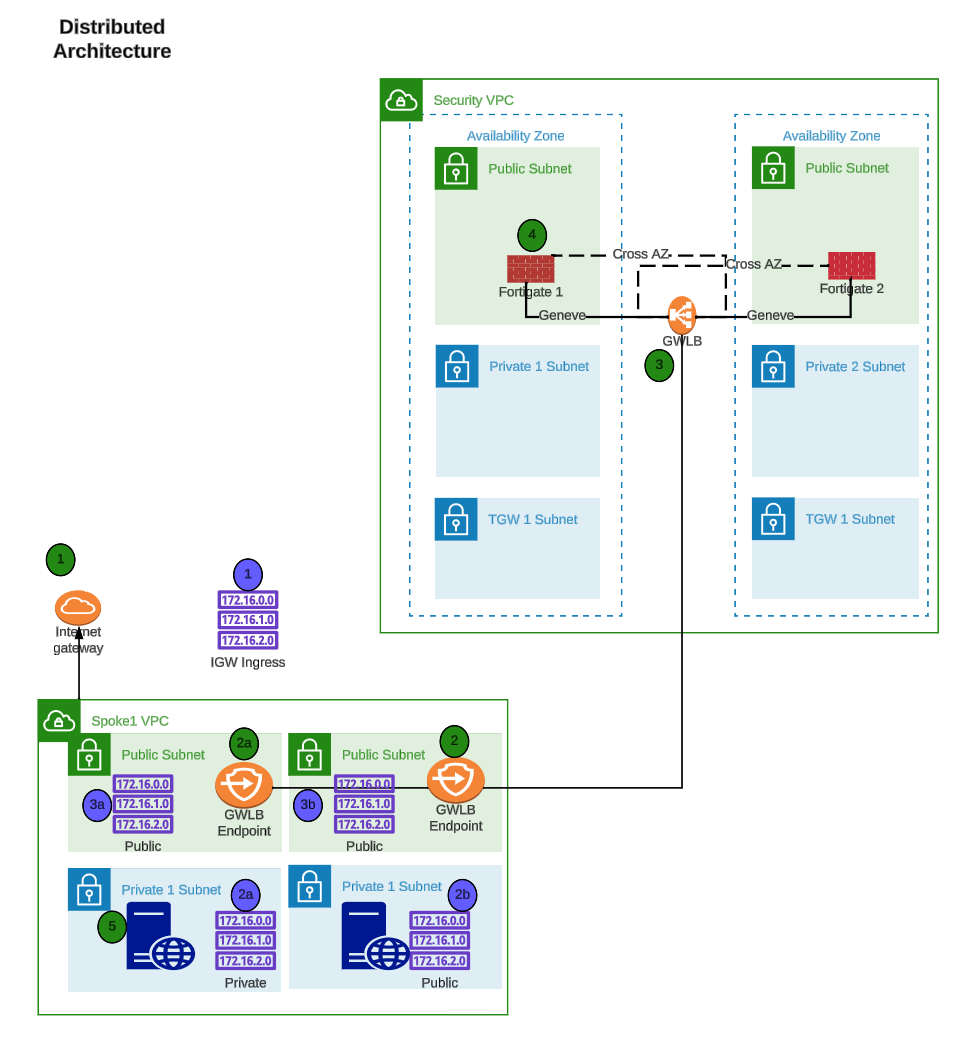

While there are many ways to organize your infrastructure, there are two main ways to design your networking when using GWLB: decentralized and centralized.

We will discuss both further below.

FortiGate Autoscale uses FortiGates on the backend and routes all traffic through the FortiGates for inspection. It uses AWS GWLB and GWLB endpoints to intercept customer traffic and inspect it transparently. As part of the deployment process for FortiGate Autoscale instances, the customer environment will need to implement VPC routing and ingress routing at the IGW to intercept the traffic to be inspected.

The FortiGate Autoscale security stack, which includes the AWS GWLB and other components, will be deployed in a centralized inspection VPC. The details of the diagram are simply an example of the main components used in the FortiGate Security VPC Autoscale stack.

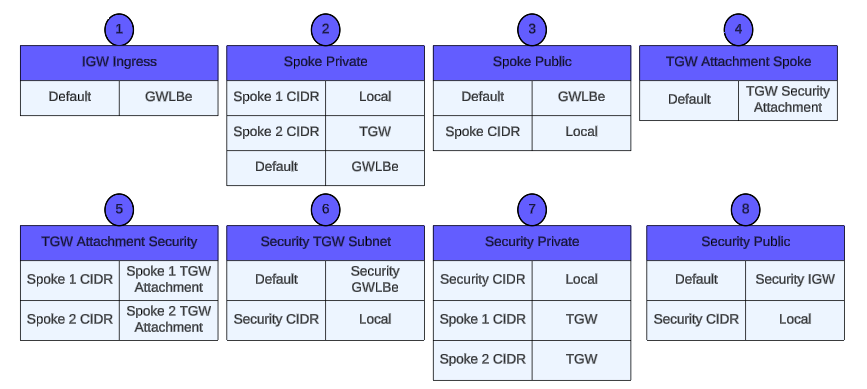

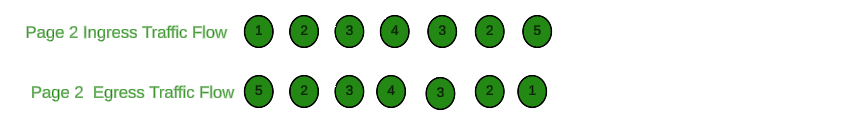

The following diagrams and paragraphs will explain what happens when customer traffic is received at the FortiGate Autoscale GWLB.

Decentralized designs do not require any routing between the protected VPC and another VPC through TGW. These designs allow simple service insertion with minimal routing changes to the VPC route table. The yellow numbers show the initial packet flow for a session and how it is routed (using ingress and VPC routes) to the GWLBe, which then sends traffic to the FortiGate Autoscale stack. The blue numbers show the return traffic after inspection by the FortiGate Autoscale stack.
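The decentralized flow can be traced with a small model of the route tables involved. The sketch below assumes a single protected VPC (10.0.0.0/16) with one application subnet (10.0.1.0/24) and one GWLBe subnet; the table names, CIDRs, and targets are hypothetical and do not come from the workshop templates.

```python
import ipaddress

# Hypothetical route tables for a decentralized (single-VPC) design.
ROUTE_TABLES = {
    # Ingress route table associated with the IGW: steer traffic for the
    # application subnet to the GWLBe instead of delivering it directly.
    "igw-ingress": {"10.0.0.0/16": "local", "10.0.1.0/24": "gwlbe"},
    # Route table of the subnet that hosts the GWLBe.
    "gwlbe-subnet": {"10.0.0.0/16": "local", "0.0.0.0/0": "igw"},
    # Route table of the protected application subnet.
    "app-subnet": {"10.0.0.0/16": "local", "0.0.0.0/0": "gwlbe"},
}


def next_hop(table_name: str, destination_ip: str) -> str:
    """Longest-prefix match within one route table (raises if nothing matches)."""
    dest = ipaddress.ip_address(destination_ip)
    candidates = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in ROUTE_TABLES[table_name].items()
        if dest in ipaddress.ip_network(cidr)
    ]
    return max(candidates, key=lambda c: c[0].prefixlen)[1]


def trace(first_table: str, destination_ip: str) -> list:
    """List the routing decisions a packet sees, including the hop after inspection."""
    hops = [next_hop(first_table, destination_ip)]
    if hops[0] == "gwlbe":
        # After inspection, traffic re-enters the VPC from the GWLBe subnet.
        hops.append(next_hop("gwlbe-subnet", destination_ip))
    return hops


ingress_path = trace("igw-ingress", "10.0.1.10")   # internet -> application
return_path = trace("app-subnet", "203.0.113.5")   # application -> internet
```

In this model both directions pass through the GWLBe before reaching their destination, which is the property the ingress and VPC routes are there to guarantee.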

Centralized designs require the use of TGW to provide a simple hub and spoke architecture to inspect traffic. These can simplify east-west and egress traffic inspection needs while removing the need for IGWs and NAT Gateways to be deployed in each protected VPC for egress inspection. You can still mix in a decentralized architecture to inspect ingress and even egress traffic while leveraging the centralized design for all east-west inspection.

The yellow numbers show the initial packet flow for a session and how it is routed (using ingress, VPC routes, and TGW routes) to the GWLBe, which then sends traffic to the FortiGate Autoscale stack. The blue numbers (east-west) and purple numbers (egress) show the return traffic after inspection by the FortiGate Autoscale Group.
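A rough way to picture the TGW side of the centralized design is two TGW route tables: one associated with the spoke VPC attachments that sends everything to the inspection VPC, and one associated with the inspection VPC attachment that knows how to reach each spoke. The sketch below models that idea; the attachment names, route table names, and CIDRs are hypothetical, and a real deployment may lay its tables out differently.

```python
import ipaddress

# Hypothetical TGW route tables for hub and spoke inspection.
TGW_ROUTE_TABLES = {
    "spoke-rt": {"0.0.0.0/0": "inspection-vpc-attachment"},
    "inspection-rt": {
        "10.1.0.0/16": "spoke-a-attachment",
        "10.2.0.0/16": "spoke-b-attachment",
    },
}

# Which TGW route table each attachment is associated with.
ASSOCIATIONS = {
    "spoke-a-attachment": "spoke-rt",
    "spoke-b-attachment": "spoke-rt",
    "inspection-vpc-attachment": "inspection-rt",
}


def tgw_forward(source_attachment: str, destination_ip: str) -> str:
    """Return the attachment the TGW forwards to, or "drop" if no route matches."""
    table = TGW_ROUTE_TABLES[ASSOCIATIONS[source_attachment]]
    dest = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in table.items()
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return "drop"
    return max(matches, key=lambda m: m[0].prefixlen)[1]


# East-west: a host in spoke A (10.1.0.0/16) talks to 10.2.0.25 in spoke B.
to_inspection = tgw_forward("spoke-a-attachment", "10.2.0.25")
after_inspection = tgw_forward("inspection-vpc-attachment", "10.2.0.25")

# Egress: a host in spoke A talks to an internet address.
egress_leg = tgw_forward("spoke-a-attachment", "198.51.100.7")
```

Because the spoke route table holds only a default route toward the inspection VPC, spokes cannot reach each other or the internet without first crossing the inspection stack.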