FortiGates & GWLB HA in AWS

Introduction

Welcome

The purpose of this site is to document how FortiGates and GWLB work in AWS after deployment and during a failover event, and to describe best practices for common use cases.

For other documentation needs such as FortiOS administration, please reference docs.fortinet.com.

Overview

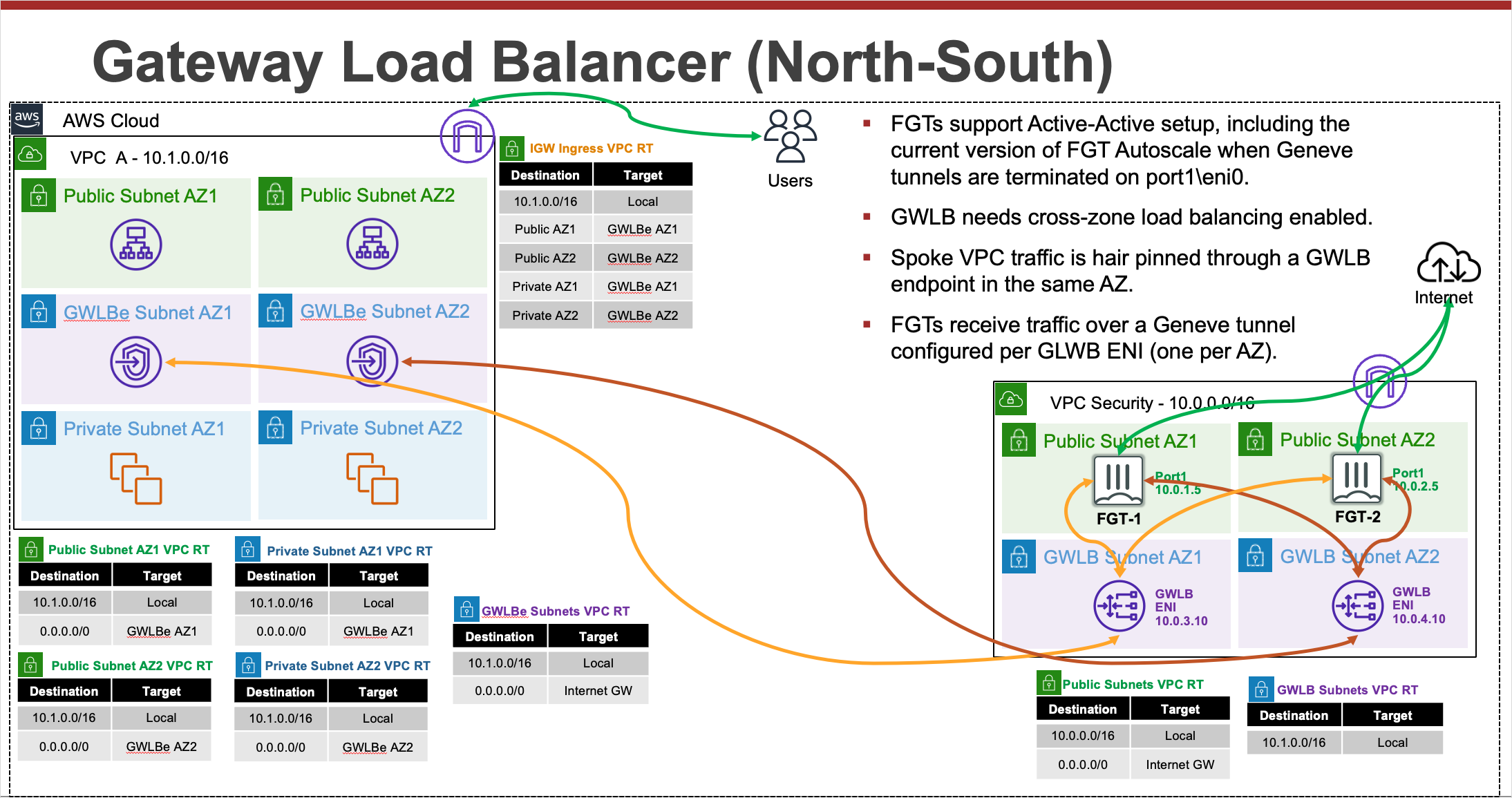

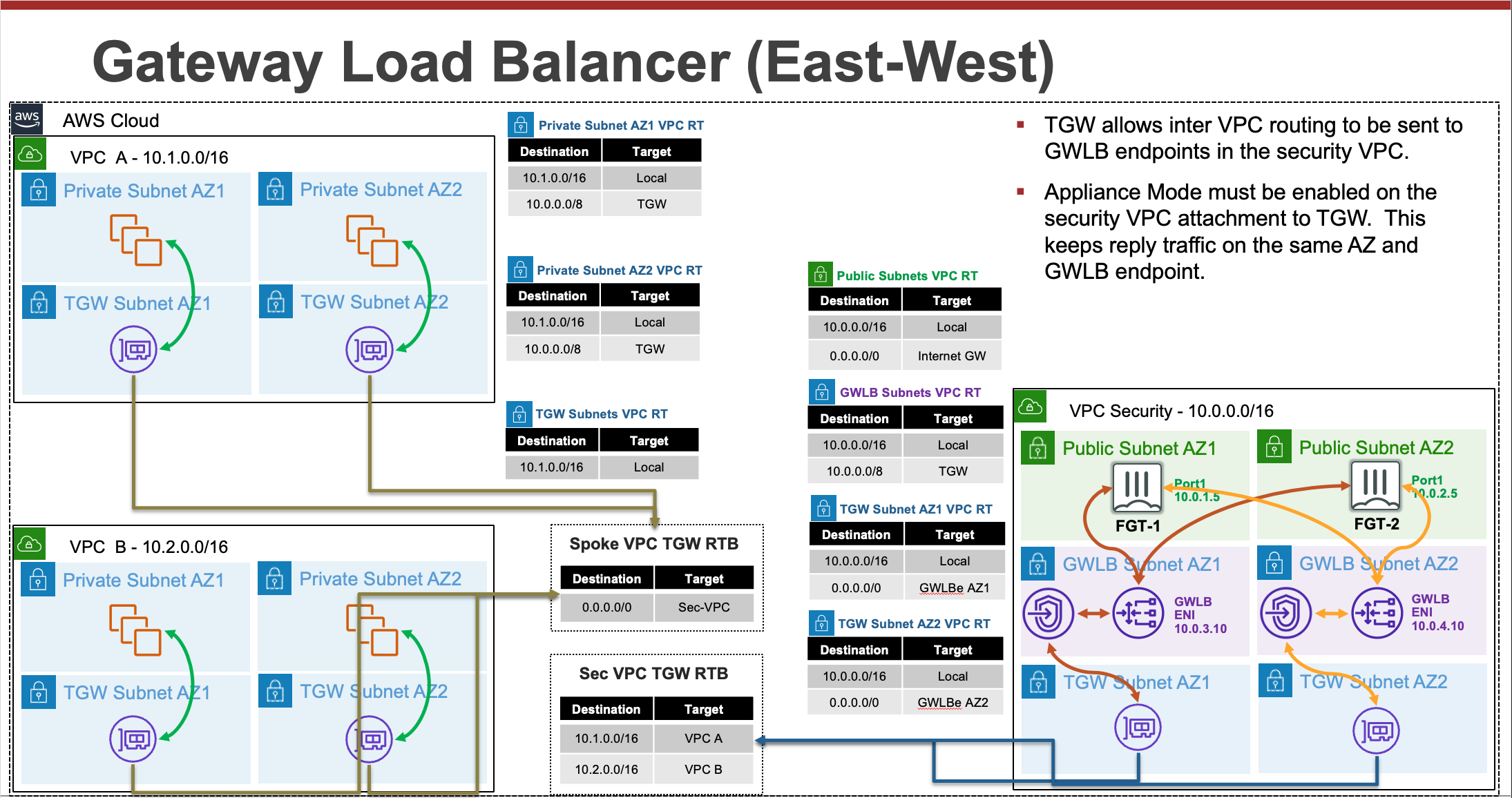

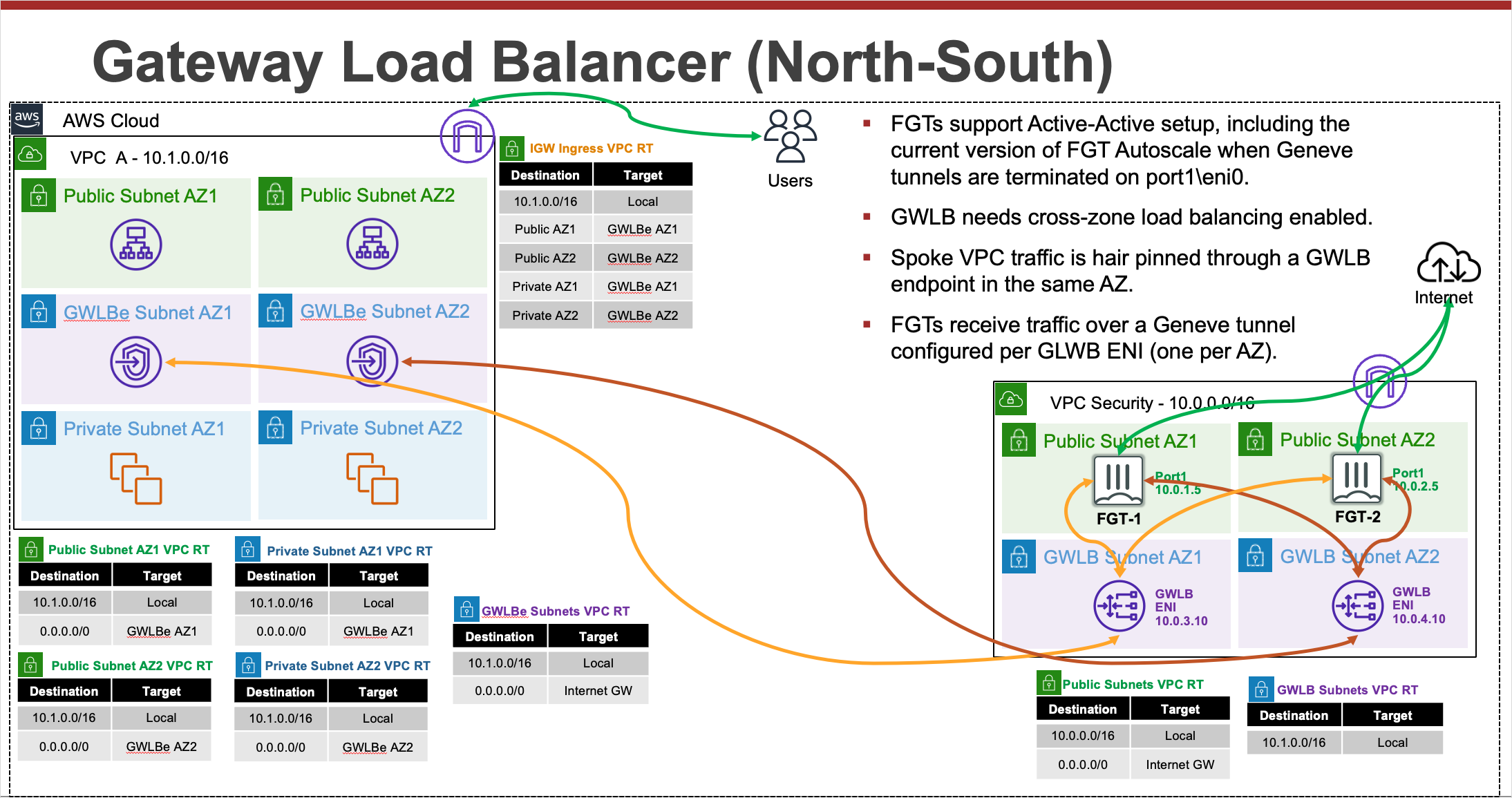

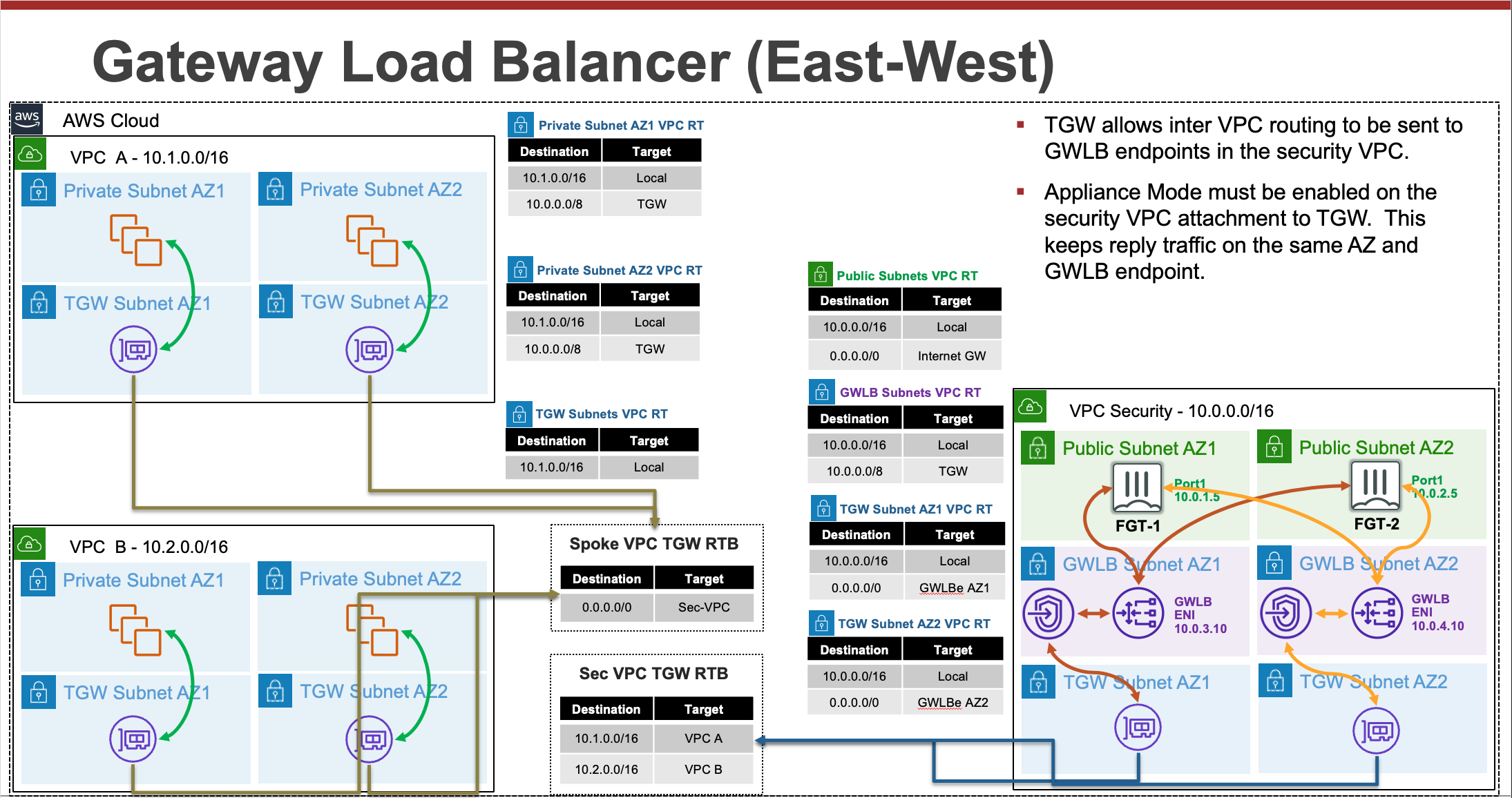

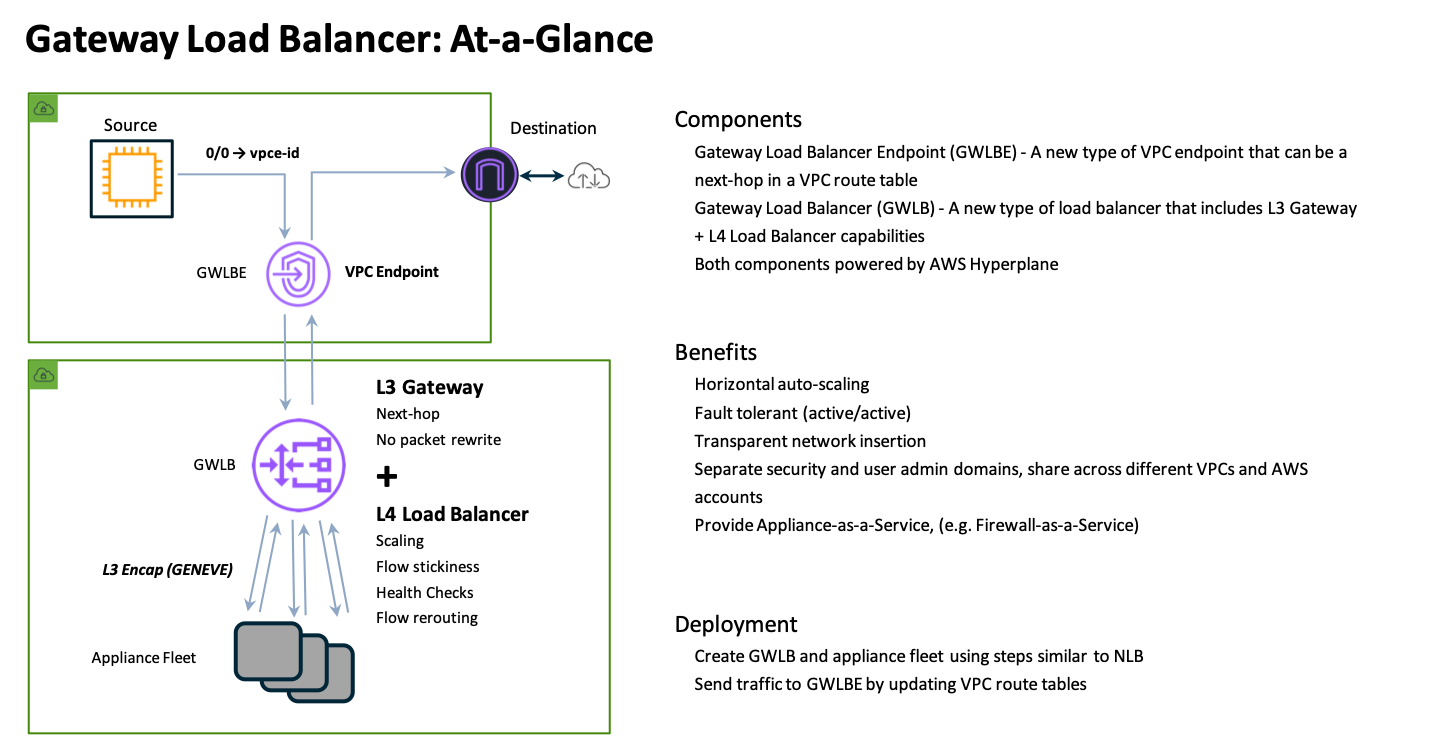

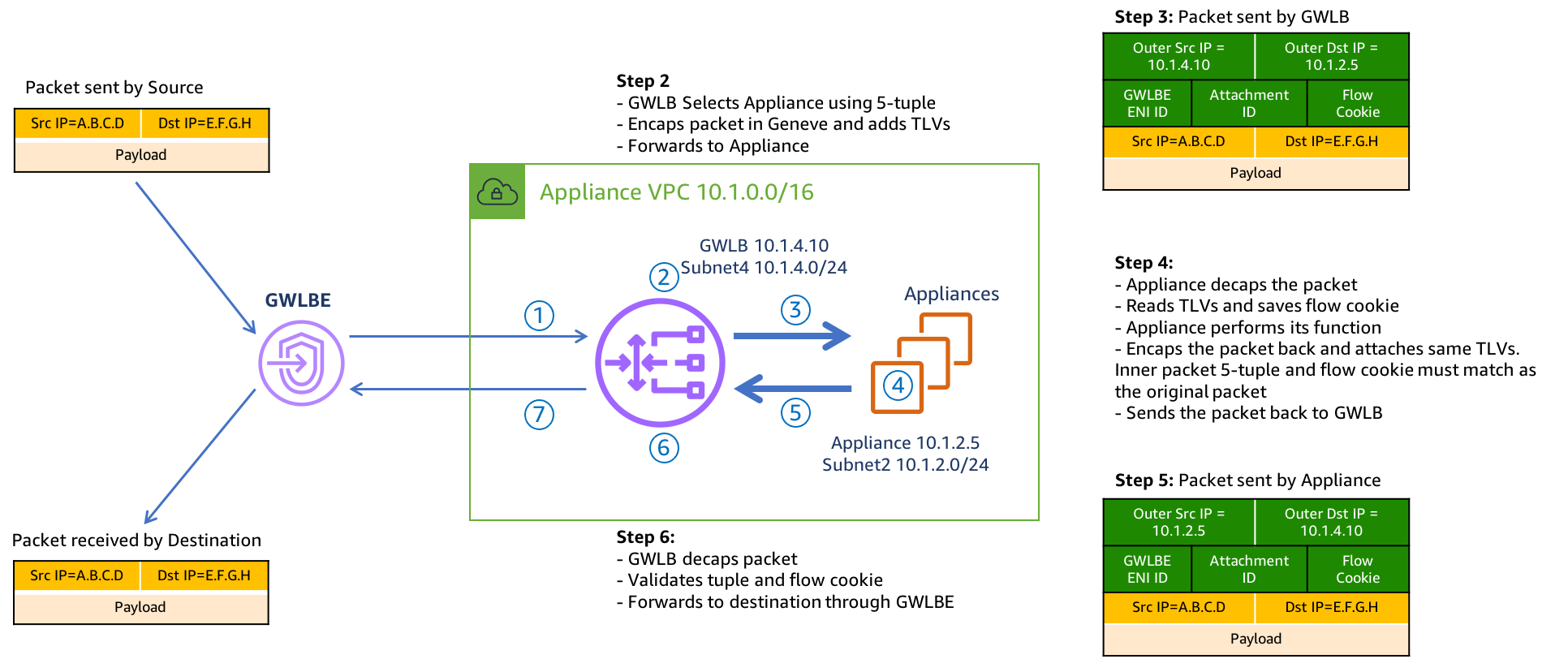

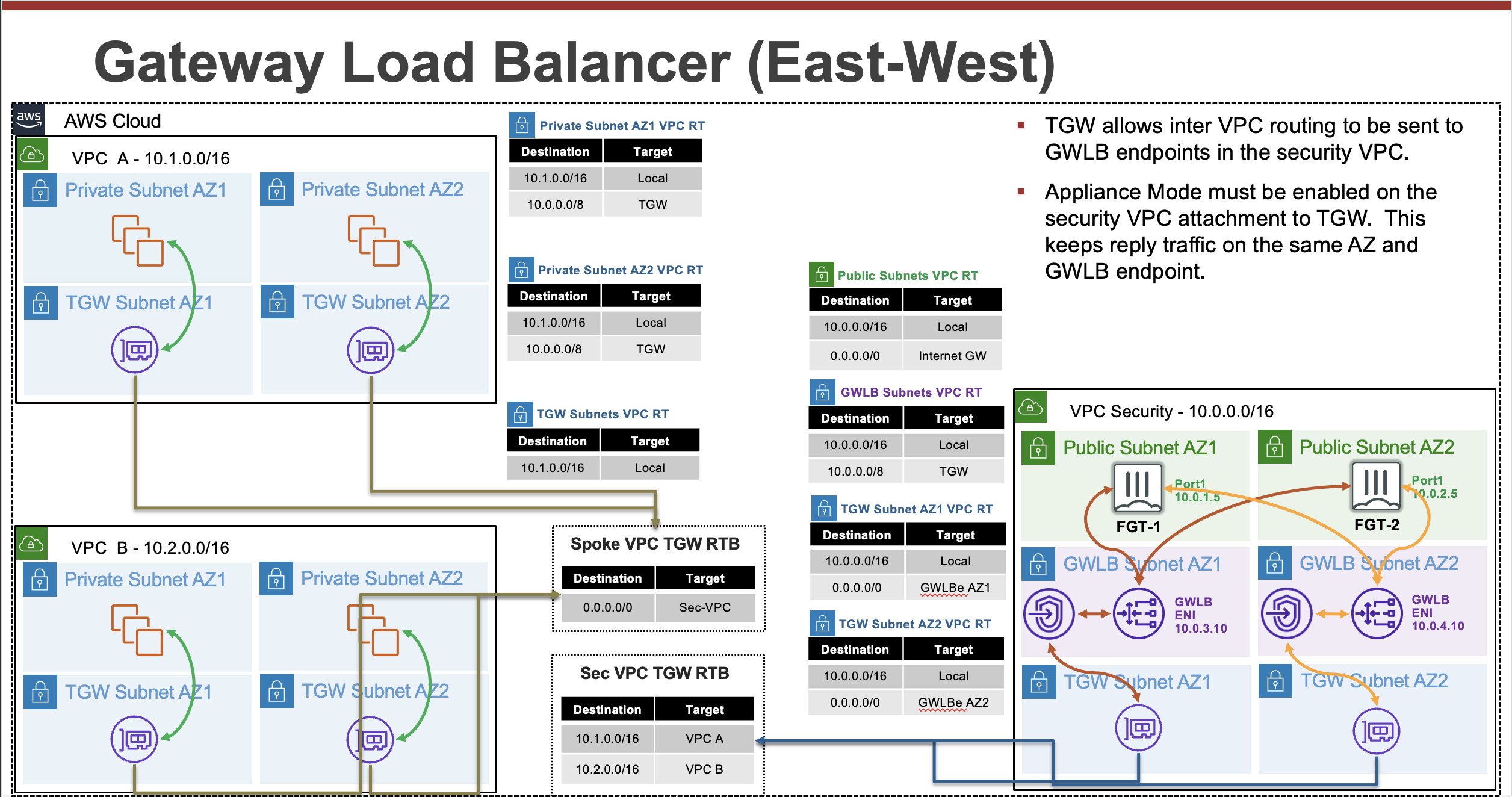

FortiOS supports integrating with AWS Gateway Load Balancer (GWLB) starting in version 6.4.4 GA. GWLB makes it easier to inspect traffic with a fleet of active-active FortiGates. GWLB tracks flows and sends all traffic for a single flow to the same FortiGate, so there is no need to apply source NAT to get symmetrical routing. In addition, with VPC Ingress Routing and GWLB endpoints (GWLBe), you can use VPC routes to granularly direct traffic to your GWLB and FortiGates for transparent traffic inspection.

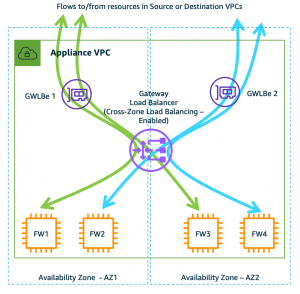

This solution works with a fleet of FortiGates deployed in multiple availability zones. The FortiGates can be a set of independent FortiGates or even a clustered auto scale group.

The main benefits of this solution are:

- Active-Active scale out design for traffic inspection

- Symmetrical routing of traffic without the need for source NAT

- VPC routing is easily used to direct traffic for inspection

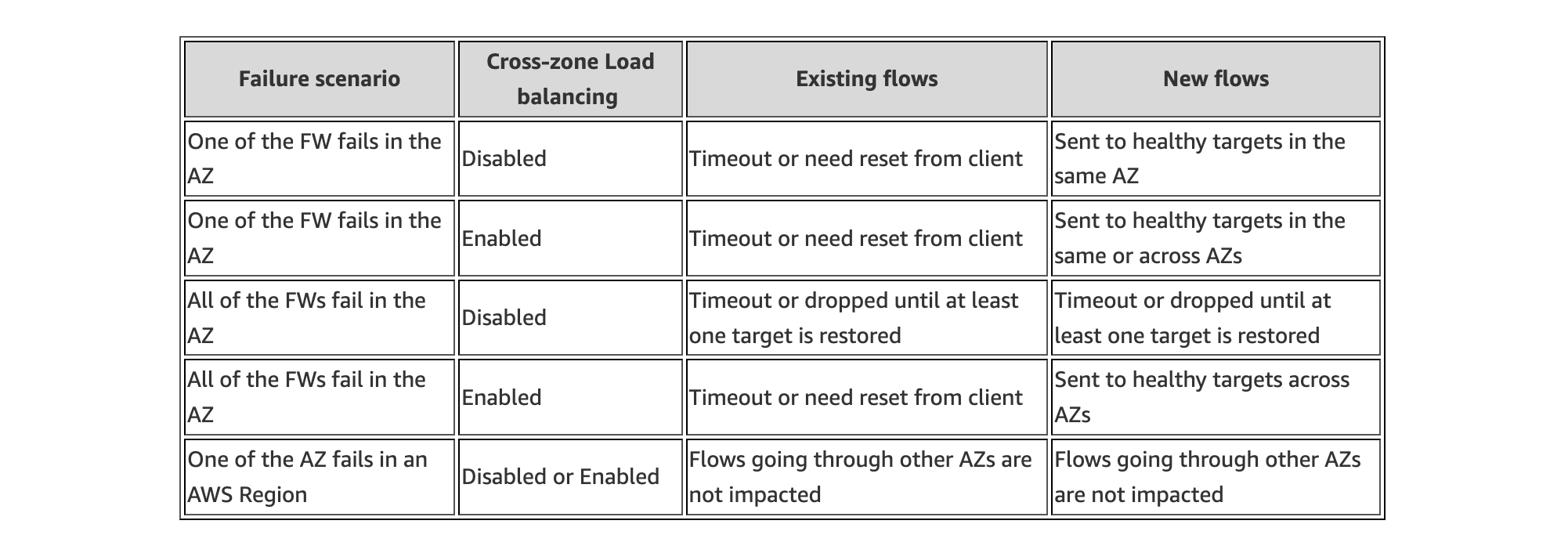

- Support for cross zone load balancing and failover

Note: Other Fortinet solutions for AWS such as FGCP HA (Dual or Single AZ) and Auto Scaling are available. Please visit www.fortinet.com/aws for further information.

Solution Components


Failover Process


Templates

It is best practice to use Infrastructure as Code (IaC) templates to deploy FortiGates & GWLB in AWS, as quite a few components make up the entire solution. The templates can deploy a new VPC and can integrate with a new or existing Transit Gateway.

Reference the CloudFormation and Terraform templates in the GitHub repos below, and see the quick start guides for how to use them for a deployment.

Note

You will need administrator privileges to run these templates because they create IAM roles, policies, and other resources that are required for the solution and for automating the deployment.

fortigate-aws-gwlb-cloudformation

fortigate-aws-gwlb-terraform

Post Deployment

Note

This section picks up after a successful deployment with CloudFormation. The same steps can be used after any deployment to validate a successful setup and test failover.

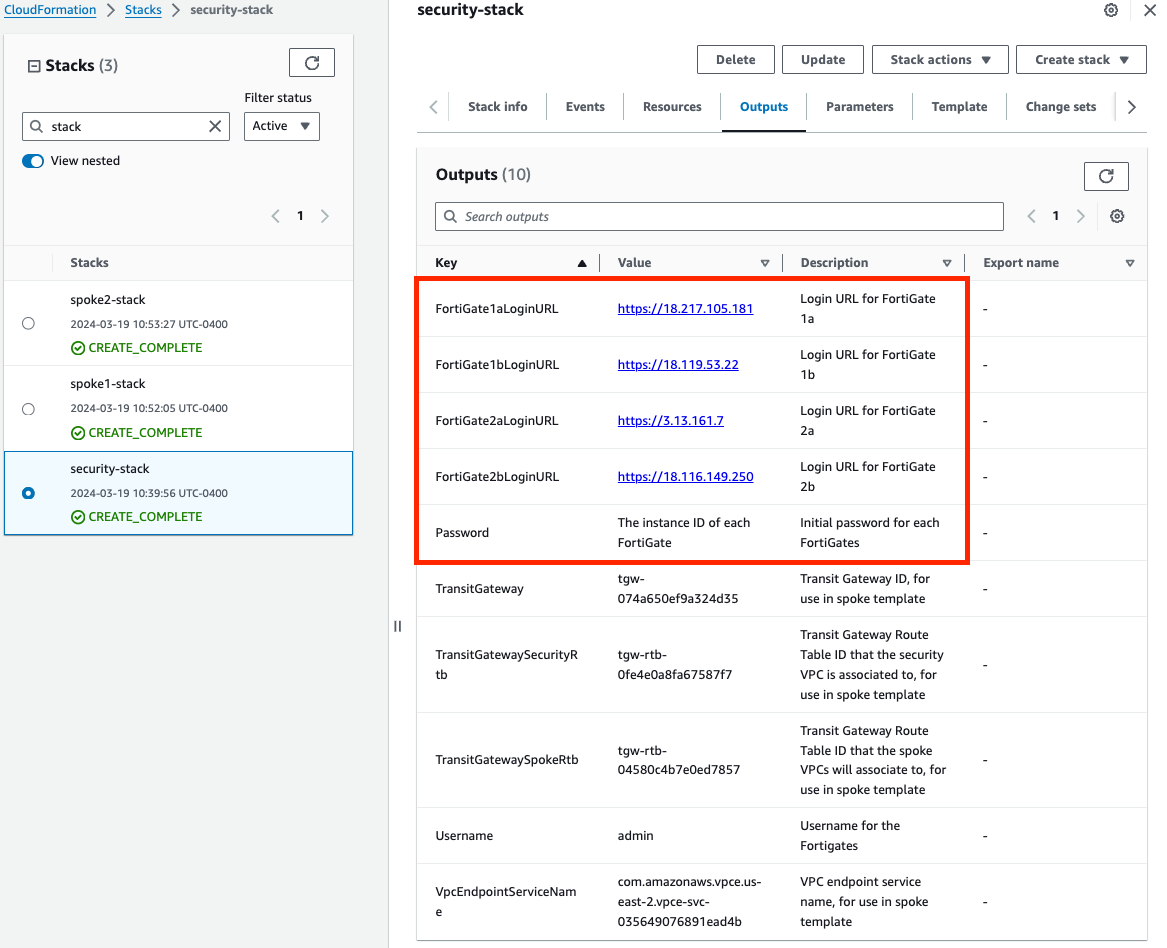

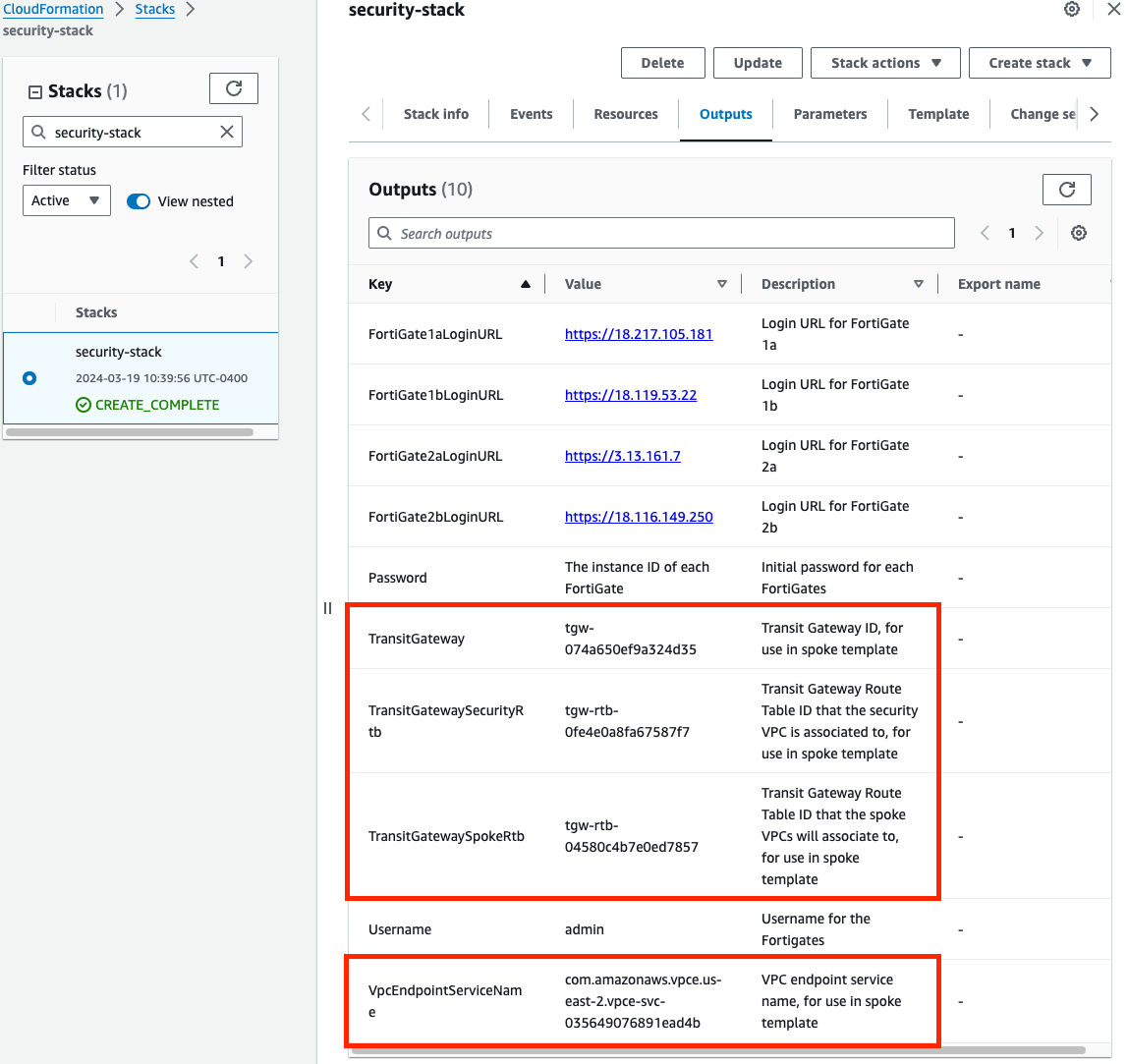

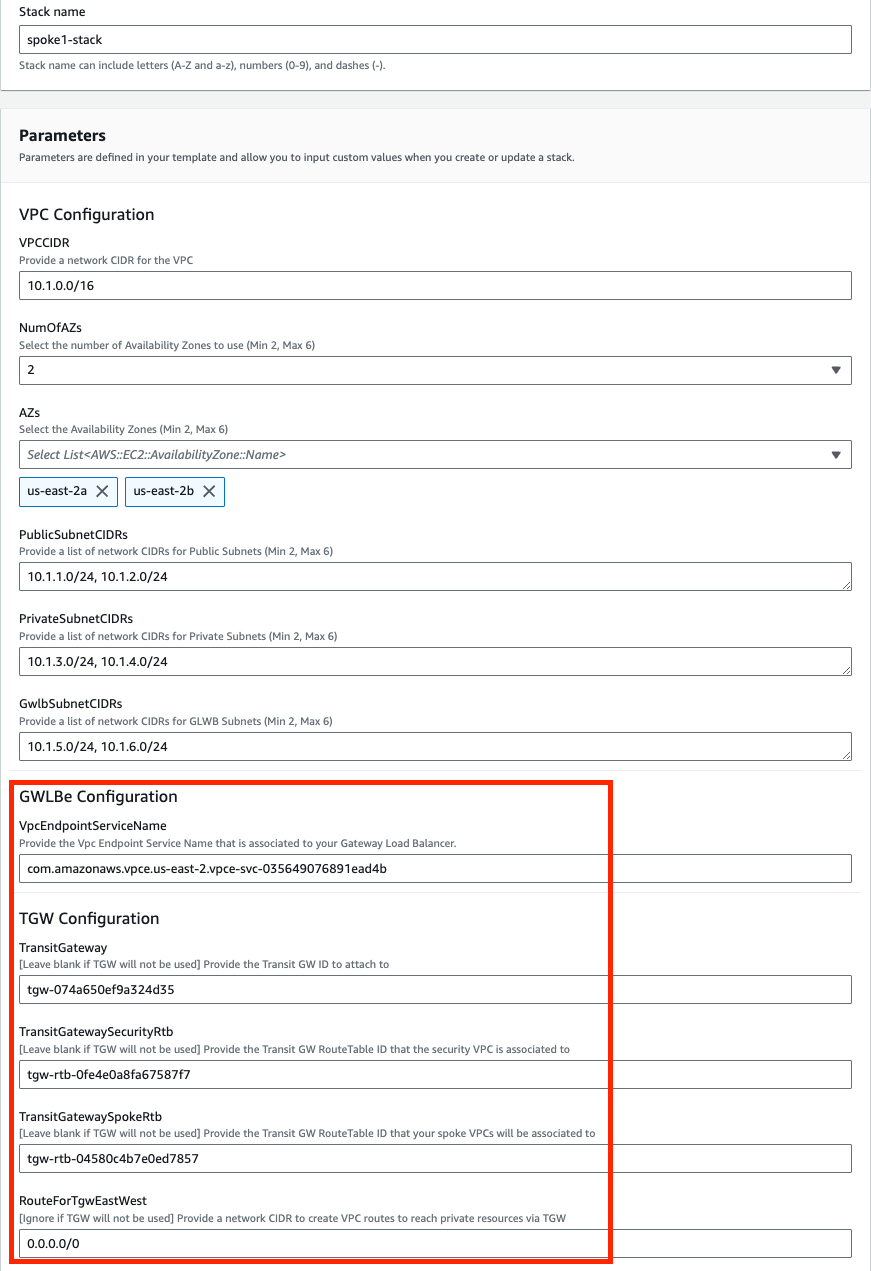

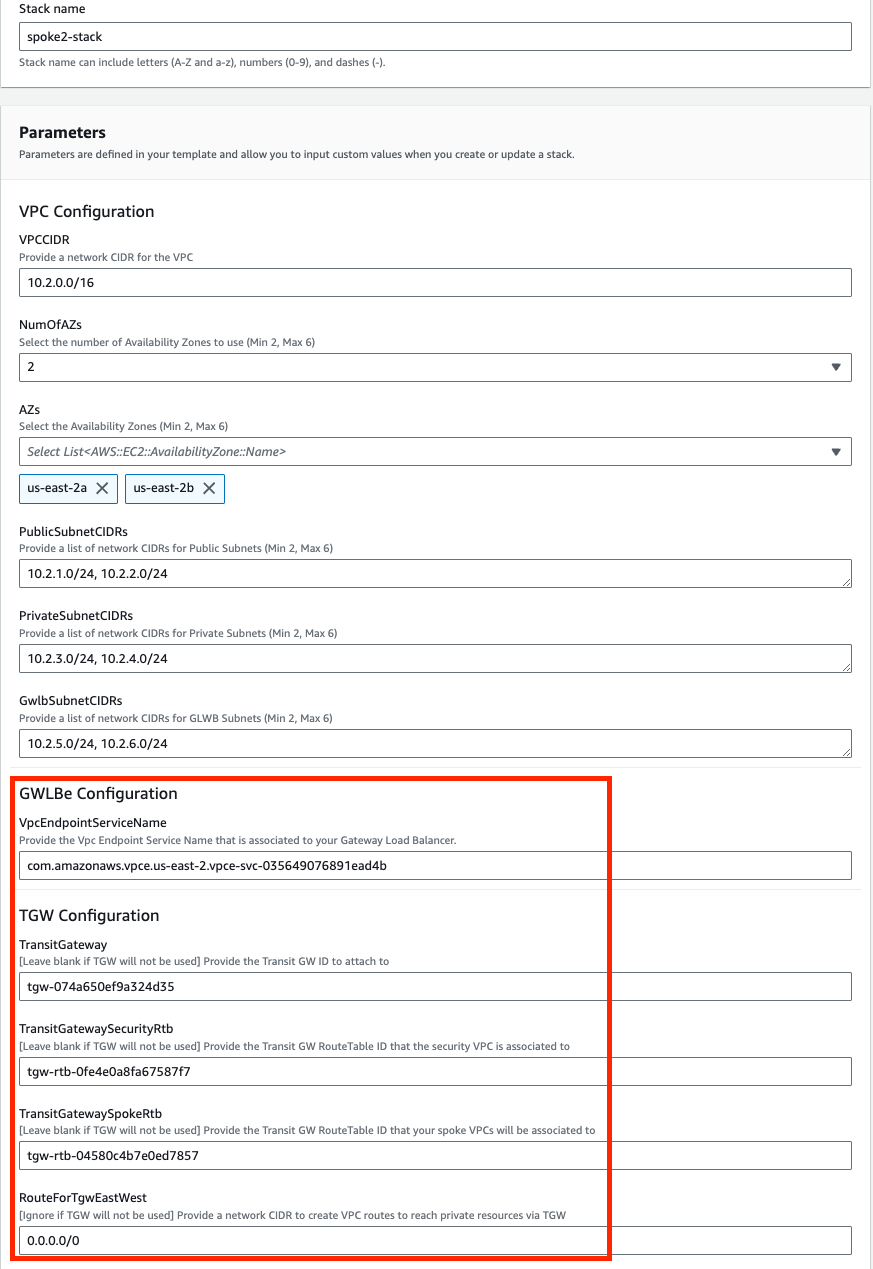

When using CloudFormation, your stack will have outputs you can use to log in to the FortiGates via the cluster or dedicated EIPs. If you used Terraform, these outputs are listed in your terminal session. If you chose to deploy a new TGW as part of the deployment, you will also see the IDs of your Transit Gateway and TGW route tables. These can be used as inputs for the ‘SpokeVPC_TGW_MultiAZ.template.json’ template.

    Apply complete! Resources: 85 added, 0 changed, 0 destroyed.

    Outputs:

    fgt_login_info = <<EOT
    # fgt username: admin
    # fgt initial password: instance-id of the fgt
    # fgt_ids_a : ["i-053888445f2e677ef","i-09c5e7a6bf403cd77"]
    # fgt_ips_a : ["34.235.8.29","52.70.176.130"]
    # fgt_ids_b : ["i-094aae24d8f1665b0","i-0575b16f6aeeb0e15"]
    # fgt_ips_b : ["3.210.241.134","44.196.135.34"]
    EOT
    gwlb_info = <<EOT
    # gwlb arn_suffix: gwy/poc-sec-gwlb/09856ffbfe1862f3
    # gwlb service_name : com.amazonaws.vpce.us-east-1.vpce-svc-0db0f1b8e4b8445f1
    # gwlb service_type : GatewayLoadBalancer
    # gwlb ips : ["10.0.13.83","10.0.14.93"]
    EOT
    tgw_info = <<EOT
    # tgw id: tgw-09eb29c4aa20fe1ce
    # tgw spoke route table id: tgw-rtb-0b080f43f34fd129d
    # tgw security route table id: tgw-rtb-0c09fcc9ce8d3e917
    EOT

Tip

We deployed some workload instances in both spoke VPCs to generate traffic flow through the security stack.
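If you want to turn the Terraform `fgt_login_info` output into a per-FortiGate login list, a minimal Python sketch could look like the following. The function name `parse_login_info` is hypothetical, and the sketch assumes that the ID and IP lists are in the same order (the first ID in `fgt_ids_a` belongs to the first IP in `fgt_ips_a`), which the output itself does not guarantee.

```python
import json
import re

# Sample text copied from the fgt_login_info output of the example deployment.
SAMPLE_OUTPUT = """\
# fgt username: admin
# fgt initial password: instance-id of the fgt
# fgt_ids_a : ["i-053888445f2e677ef","i-09c5e7a6bf403cd77"]
# fgt_ips_a : ["34.235.8.29","52.70.176.130"]
# fgt_ids_b : ["i-094aae24d8f1665b0","i-0575b16f6aeeb0e15"]
# fgt_ips_b : ["3.210.241.134","44.196.135.34"]
"""


def parse_login_info(text):
    """Return a list of (public_ip, instance_id) pairs, one per FortiGate."""
    fields = {}
    for line in text.splitlines():
        # Match lines such as: # fgt_ids_a : ["i-...","i-..."]
        match = re.match(r"#\s*(fgt_(?:ids|ips)_\w+)\s*:\s*(\[.*\])\s*$", line)
        if match:
            fields[match.group(1)] = json.loads(match.group(2))

    pairs = []
    for key, ids in sorted(fields.items()):
        if key.startswith("fgt_ids_"):
            suffix = key[len("fgt_ids_"):]
            ips = fields.get("fgt_ips_" + suffix, [])
            # Assumption: IDs and IPs are listed in the same order.
            pairs.extend(zip(ips, ids))
    return pairs


if __name__ == "__main__":
    for ip, instance_id in parse_login_info(SAMPLE_OUTPUT):
        # The initial admin password is the instance ID of each FortiGate.
        print(f"https://{ip}  user=admin  initial password={instance_id}")
```

Verify each pairing against the EC2 console before relying on it, since the ordering is only an assumption.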

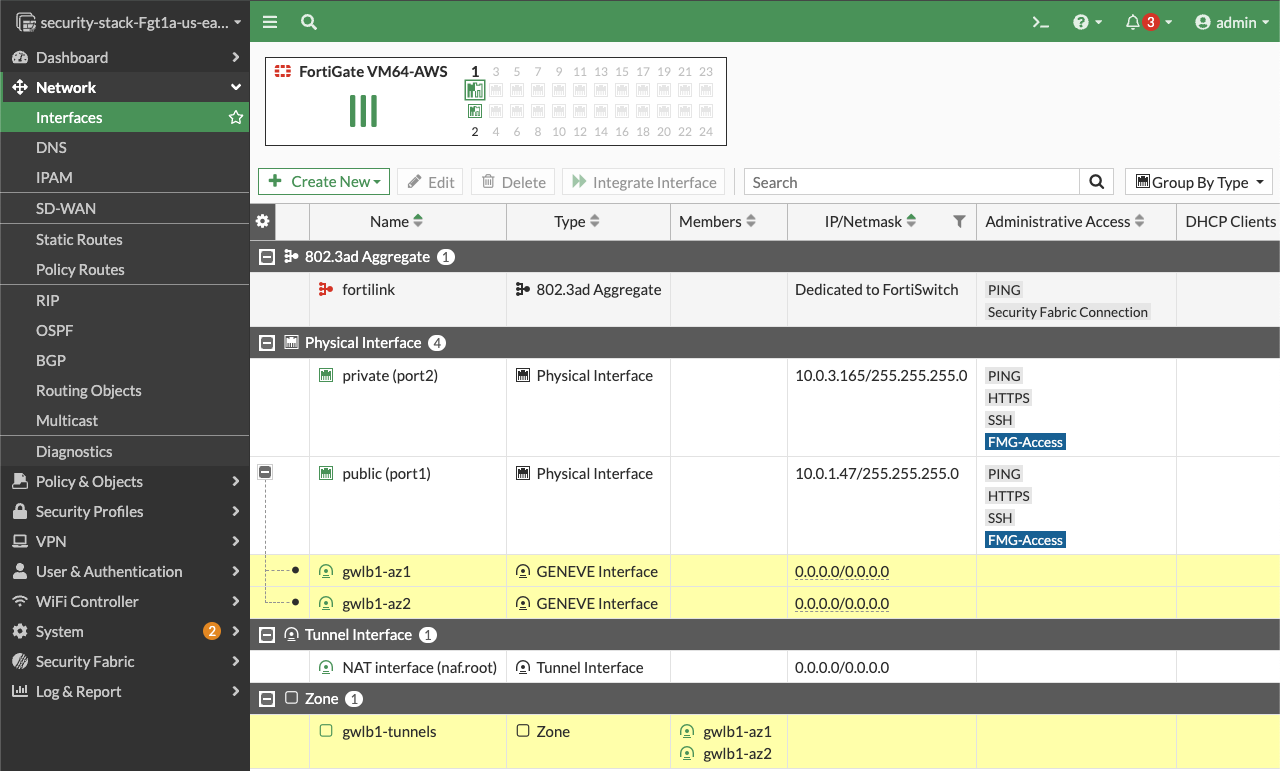

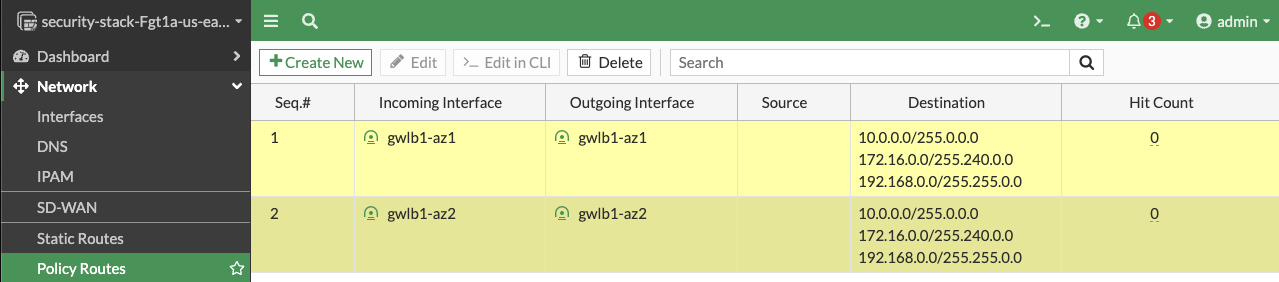

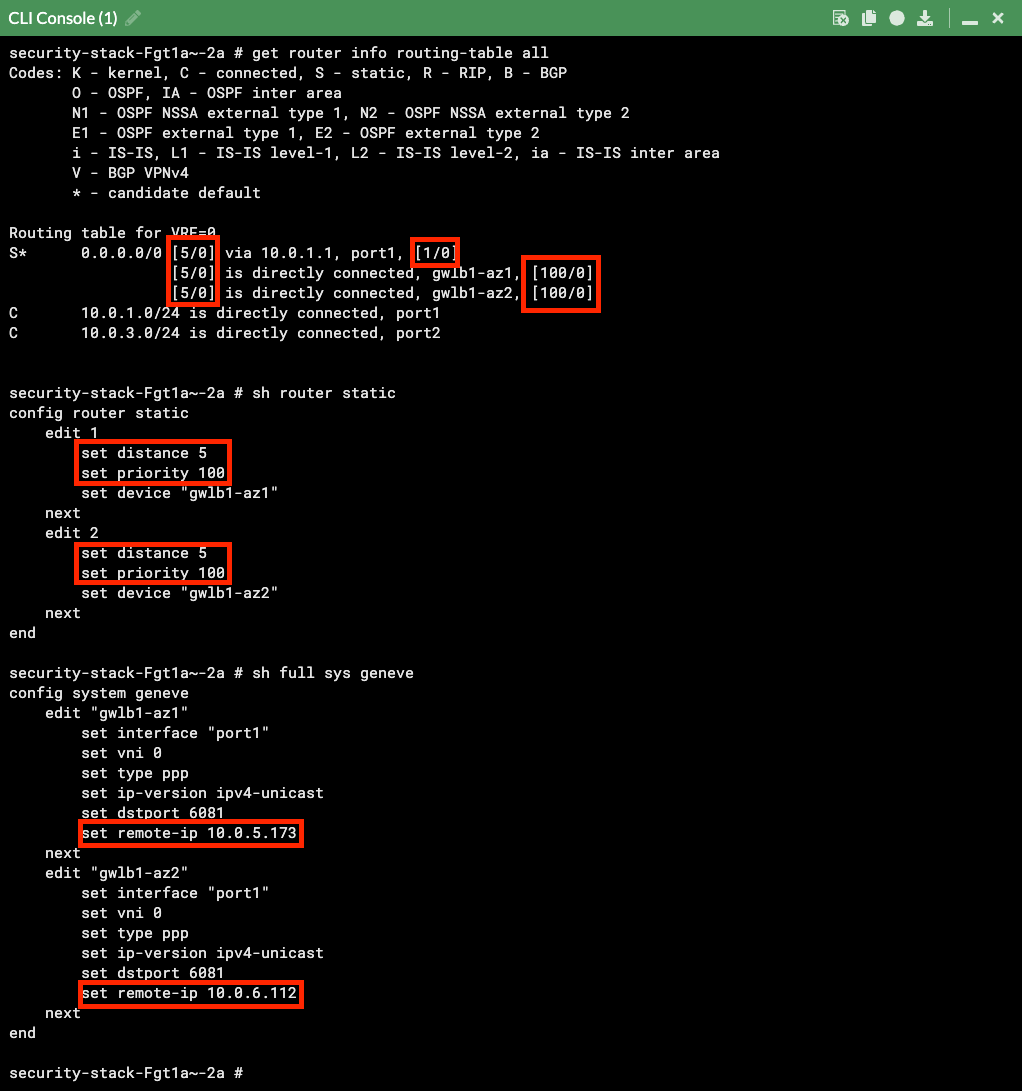

In the FortiGate GUI, navigate to Network > Interfaces and Network > Policy Routes, and run the CLI commands below to see the bootstrapped networking config. Notice that the GENEVE tunnels are between the FGT port1 interface IP and the private IP of the GWLB node ENI. Also notice the priority settings in the static routes and policy routes, which allow the FGTs to act as NAT GWs for internet bound traffic while hairpinning east/west traffic.

Note

You can check that the provided license and the base config were applied successfully with the commands below.

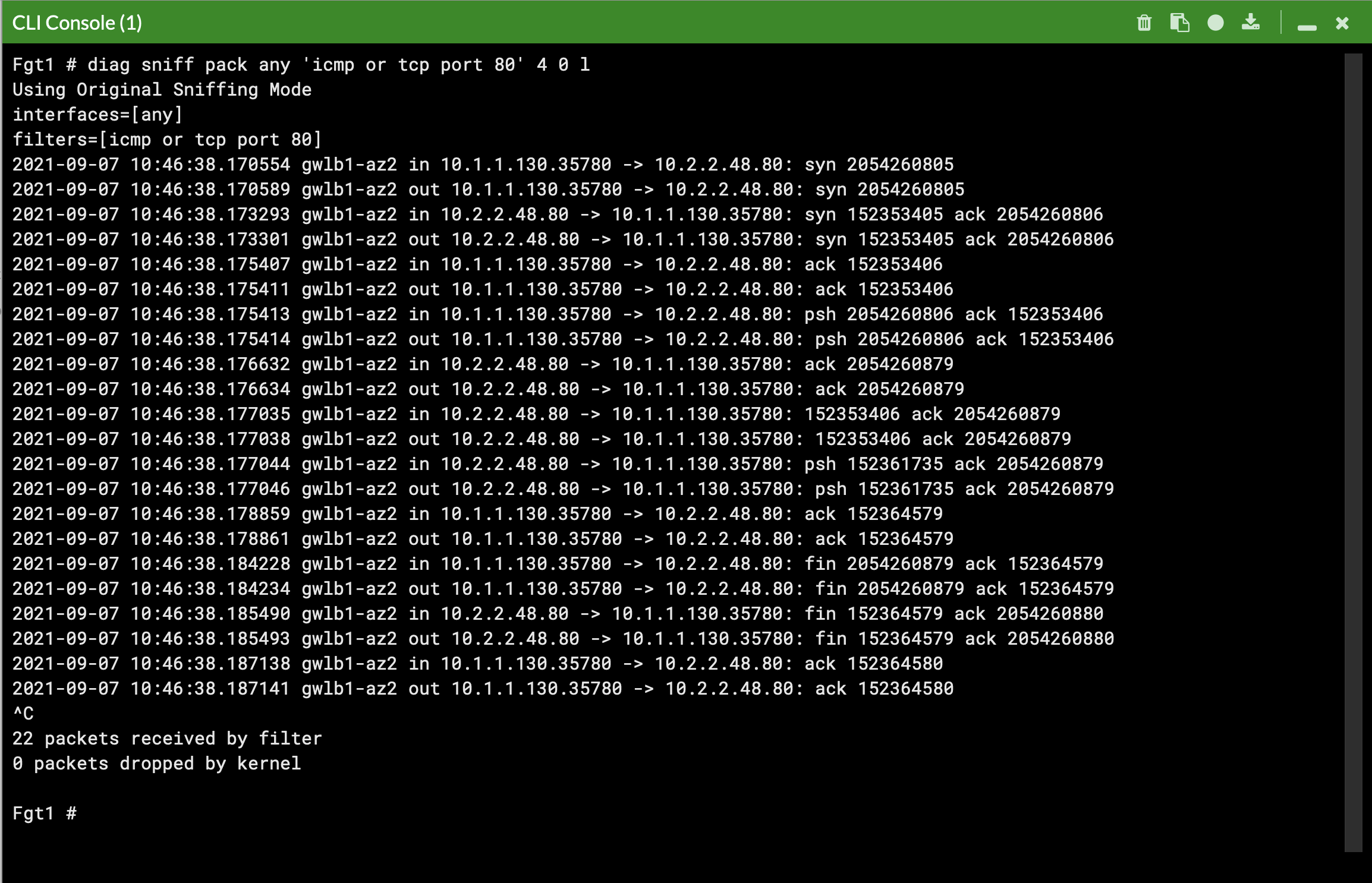

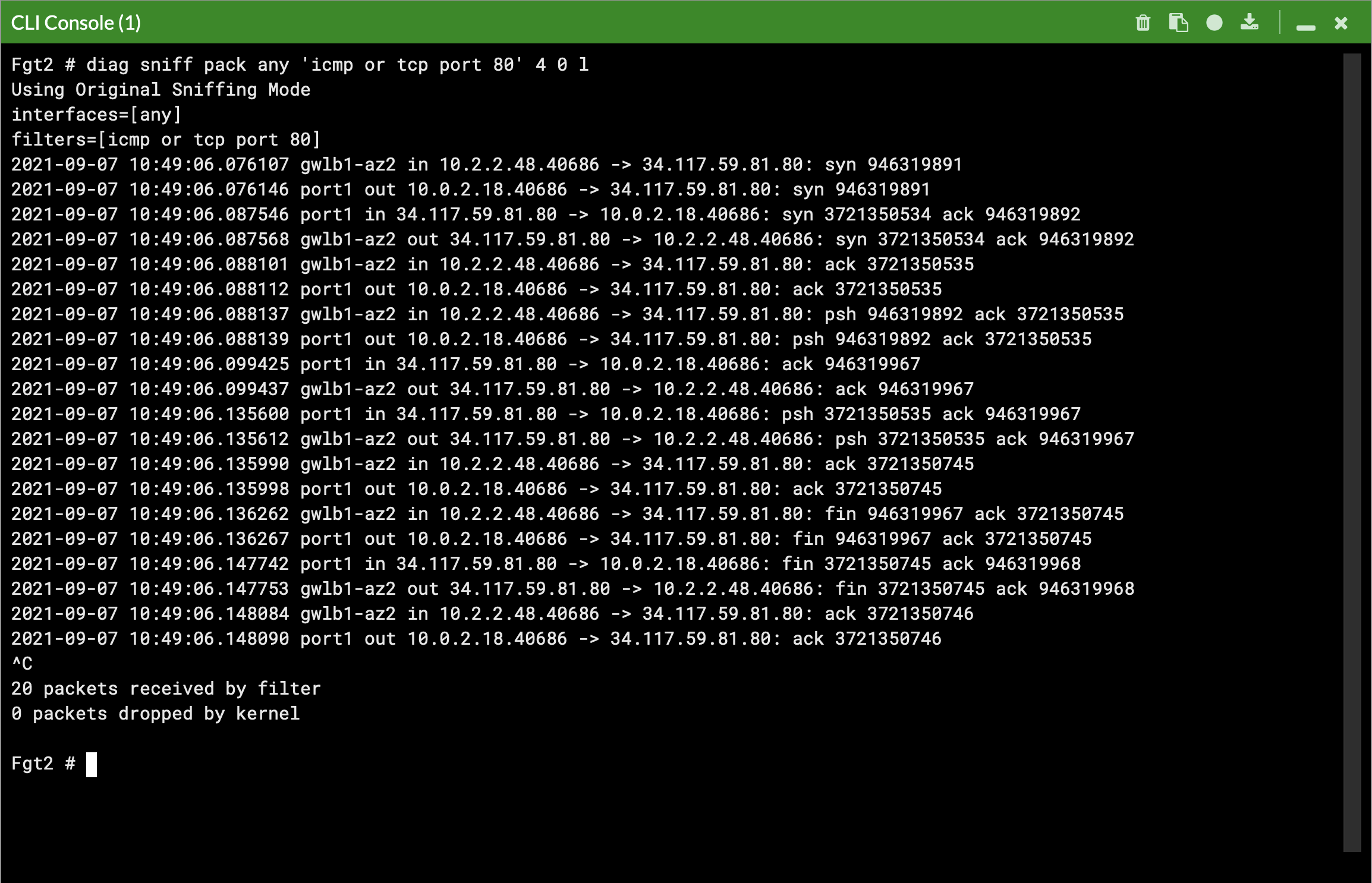

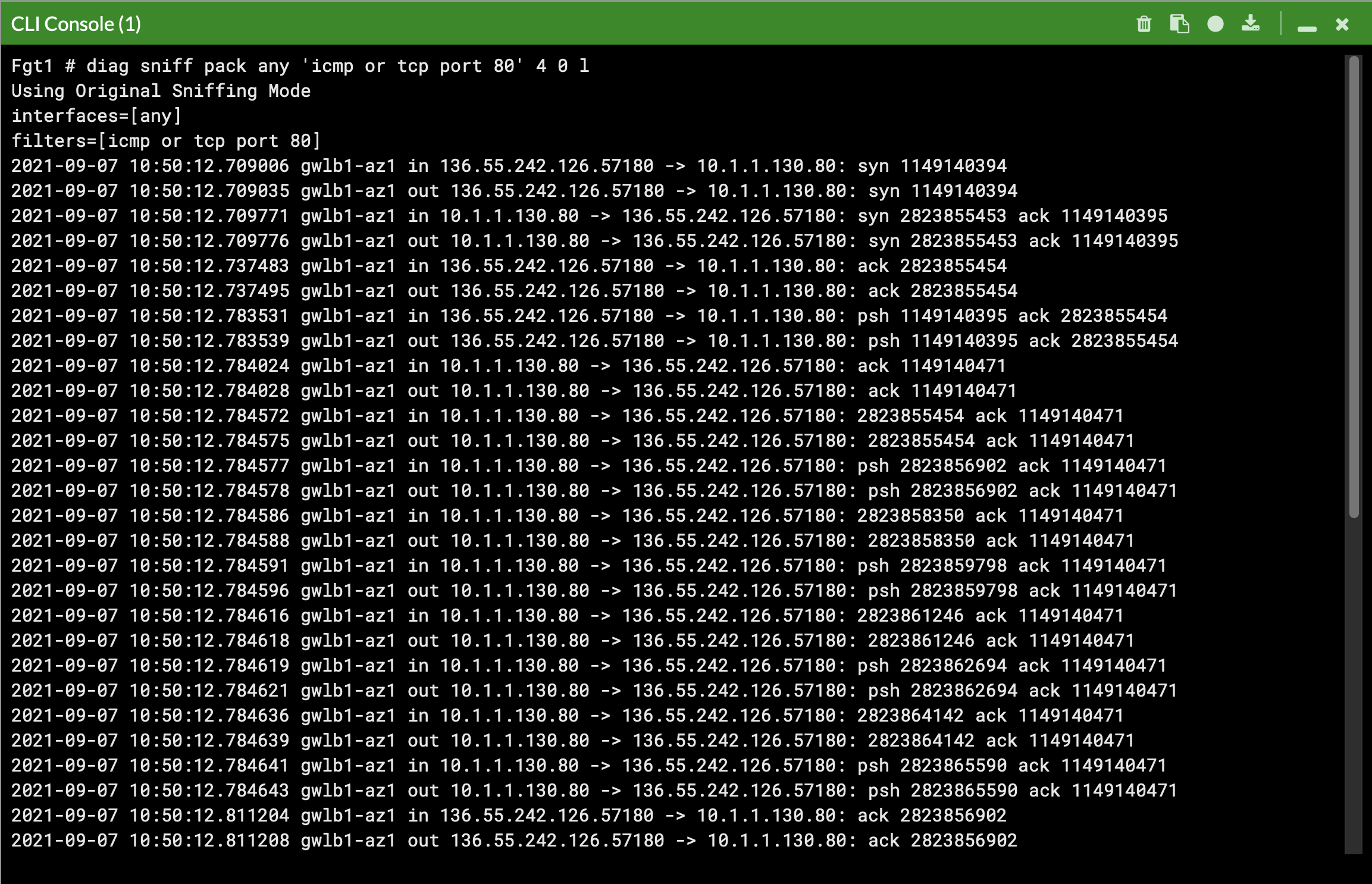

    diag debug cloudinit show
    get system status
    diag debug vm-print-license

After accessing one of the jump box instances, we can use a sniffer command on one or all FGTs to see traffic flow over the GENEVE tunnels to different destinations. Since the GWLB hashes traffic based on source/destination IPs, ports, and protocol, either run the sniffer command on all FGTs or temporarily shut down all FGTs but one to easily verify traffic flow.
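To picture why a given flow only shows up on one FGT, here is a minimal Python sketch of hash-based flow distribution. It is an illustration only: AWS does not publish the GWLB hash function, so the SHA-256 hash, the sorted-endpoint trick, and the function name `pick_target` are all assumptions made for this example.

```python
import hashlib


def pick_target(src_ip, src_port, dst_ip, dst_port, protocol, targets):
    """Map a flow's 5-tuple to one target; both directions map to the same one."""
    # Sort the two endpoints so the request and the reply produce the same key.
    endpoints = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    key = f"{protocol}|{endpoints[0]}|{endpoints[1]}".encode()
    # Illustrative hash only; not the algorithm GWLB actually uses.
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]


if __name__ == "__main__":
    # Instance IDs taken from the example Terraform output.
    fgts = ["i-053888445f2e677ef", "i-09c5e7a6bf403cd77",
            "i-094aae24d8f1665b0", "i-0575b16f6aeeb0e15"]
    forward = pick_target("10.1.1.10", 40000, "10.2.1.10", 443, "tcp", fgts)
    reply = pick_target("10.2.1.10", 443, "10.1.1.10", 40000, "tcp", fgts)
    print(forward, reply, forward == reply)
```

Because a different source port usually lands on a different target, repeated test connections from the same jump box may appear on different FGTs, which is why sniffing on all of them (or leaving only one running) makes verification easier.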

Tip

Notice that the FGTs act as a NAT GW for internet bound traffic, applying source NAT and routing it out port1, while east/west traffic is hairpinned back to the correct GENEVE tunnel.
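The forwarding behavior described in this tip can be summarized as a simple decision on the destination address. The Python sketch below is only a model of that decision, not the FortiOS policy route logic itself. It assumes that east/west traffic means RFC 1918 destinations, and the names `EAST_WEST_NETS` and `egress_action` are hypothetical.

```python
import ipaddress

# Assumption: east/west traffic is anything destined to RFC 1918 space.
EAST_WEST_NETS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def egress_action(dst_ip):
    """Return (egress path, NAT behavior) for a destination IP."""
    addr = ipaddress.ip_address(dst_ip)
    if any(addr in net for net in EAST_WEST_NETS):
        # East/west: hairpin back into the GENEVE tunnel without NAT.
        return ("geneve-tunnel", "no-nat")
    # Internet bound: leave via port1 with source NAT, like a NAT GW.
    return ("port1", "source-nat")


if __name__ == "__main__":
    for dst in ("10.2.1.10", "8.8.8.8"):
        print(dst, egress_action(dst))
```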

This concludes the post deployment example.

Use Cases


Common Architecture Patterns

While there are many ways to organize your infrastructure, from the perspective of networking, routing, and GWLBe placement there are two main ways to design your network when using GWLB: centralized and distributed.


Routing Options


Advanced SDN Connector Mode (Public ALB/NLB)

When inspecting ingress traffic, it is common to need to control traffic for specific public resources such as public ALBs and NLBs. A broad firewall policy that covers all traffic destined to the protected public subnets gives every public resource the same level of control, but per-resource policies need more granularity.

While we can use the load balancer DNS A record to resolve the public IPs, this does not give us the private IPs. When inspecting ingress traffic with GWLB, we need to match NGFW policy based on the private IPs.

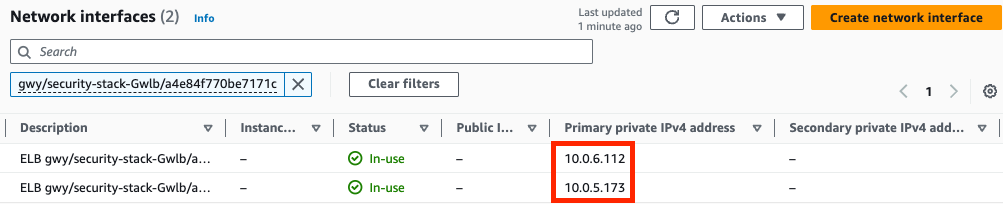

To accomplish this, we can use the SDN connector in advanced mode to allow us to search for resources based on attributes such as owner ID, resource descriptions, and tags. For ALBs/NLBs we can use the description of the network interfaces to dynamically find the public or private IPs.

Here is an example of searching for the network interfaces of the load balancer by description. We are searching using the tail end of the load balancer ARN shown in the picture above, in the bottom left of the load balancer details pane.

You can enable this advanced or alternate resource mode for an SDN connector with the command set alt-resource-ip enable. Here is an example SDN config:

    config system sdn-connector
        edit aws-instance-role
            set status enable
            set type aws
            set use-metadata-iam enable
            set alt-resource-ip enable
        next
    end

Once enabled, you create a dynamic address object using the description of the load balancers. This will be polled and resolve to the current private IPs of any matching network interfaces. Now we can easily create per application NGFW policies and control traffic to the dynamic IPs of the load balancers.
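The description-based lookup can be sketched in a few lines of Python. This is only a model of the matching idea, not how the SDN connector is implemented. It assumes that the network interfaces of a load balancer carry a description containing the tail end of the load balancer ARN (for example `ELB app/<name>/<id>`), and the ARN, interface data, and function names below are hypothetical sample values.

```python
def arn_tail(load_balancer_arn):
    """Return the part of a load balancer ARN after 'loadbalancer/'."""
    _, sep, tail = load_balancer_arn.partition(":loadbalancer/")
    if not sep:
        raise ValueError("not a load balancer ARN")
    return tail


def private_ips_for(load_balancer_arn, interfaces):
    """Return private IPs of interfaces whose description contains the ARN tail."""
    tail = arn_tail(load_balancer_arn)
    return sorted(
        eni["private_ip"] for eni in interfaces if tail in eni["description"]
    )


if __name__ == "__main__":
    # Hypothetical sample data for illustration.
    arn = ("arn:aws:elasticloadbalancing:us-east-1:123456789012:"
           "loadbalancer/app/example-alb/0123456789abcdef")
    enis = [
        {"description": "ELB app/example-alb/0123456789abcdef", "private_ip": "10.0.1.25"},
        {"description": "ELB app/example-alb/0123456789abcdef", "private_ip": "10.0.2.31"},
        {"description": "ELB net/other-nlb/fedcba9876543210", "private_ip": "10.0.1.40"},
    ]
    print(private_ips_for(arn, enis))
```

Because the load balancer can add or replace network interfaces over time, the set of matching IPs changes, which is why a polled dynamic address object is a better fit than a static one.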

FAQs

- Can you terminate the GENEVE tunnels on a different ENI, such as ENI1/port2?

Yes. This can be done but would require you to use an IP based target group instead of an Instance based target group. This would not work for the official FortiGate Auto Scale solution so this would be limited to a manual scale deployment.

- Can you send new flows to private resources via the GENEVE tunnels?

No. Traffic must come through a GWLBe so that GWLB knows where to send the reply traffic for each flow. This means, for example, that traffic generated by the FGT itself must go out port1 (to public resources) or port2 (to private resources). To reach private resources (within the same VPC or via TGW), create static routes on the FGTs that use the AWS VPC router of the connected private subnet as the gateway. The VPC router is the first host IP of each AWS subnet (e.g. 10.1.0.1 for the 10.1.0.0/24 subnet).
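If you need to work out the gateway address for those static routes, the "first host IP" rule is easy to compute. The following Python sketch uses the standard `ipaddress` module; the function name `vpc_router_ip` is hypothetical.

```python
import ipaddress


def vpc_router_ip(subnet_cidr):
    """Return the VPC router address: the first host IP of the subnet."""
    network = ipaddress.ip_network(subnet_cidr)
    return str(network.network_address + 1)


if __name__ == "__main__":
    print(vpc_router_ip("10.1.0.0/24"))
```

The returned address is the value to use as the gateway of a static route on the FortiGate interface attached to that subnet.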